The novita.ai platform hosts over 100 APIs, which makes it a rich resource for AI enthusiasts and developers. Running LMM Novita AI locally adds further advantages: tighter security, lower latency, and reduced costs. You keep full control over your AI projects and can add new language models to your workflow as needed.

This guide walks through setting up your own local LMM Novita AI, from the hardware you need to the software configuration. It also compares offerings from OpenAI, Google, and Novita AI, each of which has features suited to different needs.

Whether you’re an AI pro or a beginner, this guide will help you use local LMM Novita AI to its fullest. Get ready to explore AI and open up new doors for your projects. Let’s start this exciting journey together!

Key Takeaways

- Learn how to set up a local LMM Novita AI with step-by-step instructions

- Understand the hardware and software requirements for running LMM Novita AI locally

- Explore popular LMM platforms and their unique features

- Optimize your local LMM Novita AI for enhanced performance

- Troubleshoot common issues and maintain your local LMM Novita AI setup

Introduction to Local LMM Novita AI

LMM Novita AI is a top-notch artificial intelligence model for natural language tasks. It helps users solve complex language problems easily. Running it locally gives you full control over your AI setup. You can tweak settings and try different options to meet your needs.

Setting up LMM Novita AI locally has many perks. You get better data privacy, faster speeds, and more control over resources. With a local setup, you can use LMM Novita AI’s full power. You can customize and optimize it for your project’s goals.

What is LMM Novita AI?

LMM Novita AI is a language model that uses advanced AI techniques to understand and generate human language. Because it captures context and sentiment well, it suits chatbots, content creation, and similar applications.

One big plus of running LMM Novita AI locally is keeping your data safe. You process data on your own hardware. This keeps sensitive info secure and private, avoiding cloud risks.

Benefits of Running LMM Novita AI Locally

Running LMM Novita AI locally has many benefits for your AI projects:

- Customization and optimization: Make the model fit your needs by adjusting settings for the best results.

- Reduced latency: Get quicker responses since you don’t need to send data to distant servers.

- Cost savings: Use your own hardware to save on cloud costs.

- Enhanced security: Keep your data safe by processing it locally, reducing the chance of breaches.

“Running locally offers better control over data privacy, reduced latency, and the ability to customize resource allocation.”

Using local LMM Novita AI opens up new possibilities for your AI projects. It ensures top performance, security, and flexibility at every step.

Preparing Your System for LMM Novita AI Setup

Before you start installing LMM Novita AI, make sure your system is ready. Novita AI uses AI for natural language and machine learning. It helps keep data safe and works well locally.

A capable GPU, such as an NVIDIA card, is key for good performance. You’ll also need at least 8 GB of RAM and plenty of storage for large datasets and models. For the best results, aim for 16 GB of RAM or more and 500 GB of storage, preferably on an SSD for faster reads and writes.

Hardware Requirements and Recommendations

| Component | Minimum Requirement | Recommended |
|---|---|---|
| Processor | Intel i5 or equivalent | Multi-core processor |
| RAM | 8 GB | 16 GB or higher |
| Storage | 500 GB | SSD storage |
| GPU | NVIDIA GPU (for GPU-enabled machines) | High-end NVIDIA GPU with sufficient VRAM |

At a minimum, LMM Novita AI needs an Intel i5-class processor and 8 GB of RAM; a multi-core processor and 16 GB or more give better results. It runs on Windows, macOS, and Linux, and uses the GPU where one is available.
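As a rough illustration, the requirements above can be checked with a short preflight script. This is a minimal sketch using only the Python standard library; the function names `total_ram_gib` and `preflight` are hypothetical, the default thresholds are the nominal minimums from the table, and the RAM lookup relies on `os.sysconf`, which is not available on Windows.

```python
import os
import shutil
from typing import Optional

GIB = 1024 ** 3


def total_ram_gib() -> Optional[float]:
    """Return physical RAM in GiB, or None where it cannot be read (e.g. Windows)."""
    try:
        total = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    except (AttributeError, ValueError, OSError):
        return None
    return total / GIB if total > 0 else None


def preflight(path: str = ".", min_ram_gib: float = 8, min_disk_gib: float = 500) -> dict:
    """Compare this machine against nominal minimums (thresholds are assumptions)."""
    ram = total_ram_gib()
    disk = shutil.disk_usage(path)  # total/used/free bytes for the drive holding `path`
    return {
        "cpu_cores": os.cpu_count(),
        "ram_gib": ram,
        "ram_ok": None if ram is None else ram >= min_ram_gib,
        "disk_total_gib": disk.total / GIB,
        "disk_free_gib": disk.free / GIB,
        "disk_ok": disk.total / GIB >= min_disk_gib,
        # Only checks that the NVIDIA command-line tool is on PATH, not that a GPU works.
        "nvidia_smi_found": shutil.which("nvidia-smi") is not None,
    }
```

Operating systems usually report slightly less than the nominal figure (an "8 GB" machine often shows under 8 GiB), so treat a near miss as a prompt to look closer rather than a hard failure.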

Software Dependencies for LMM Novita AI Installation

For software, use Python 3.8 or later, a deep learning framework such as TensorFlow or PyTorch, and, on machines with an NVIDIA GPU, CUDA and cuDNN.

Creating a virtual environment in Python helps manage dependencies. A clean environment is important for AI model training and inference.
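One way to create such an environment is through Python’s built-in `venv` module. The sketch below is illustrative only: the helper name `create_env` and the directory name in the usage note are assumptions, not part of any Novita AI tooling.

```python
import os
import venv
from pathlib import Path


def create_env(env_dir: str, with_pip: bool = True) -> Path:
    """Create a virtual environment and return the folder holding its executables."""
    venv.EnvBuilder(with_pip=with_pip).create(env_dir)
    # Windows places executables in "Scripts"; other platforms use "bin".
    return Path(env_dir) / ("Scripts" if os.name == "nt" else "bin")
```

Calling `create_env("novita-env")` has the same effect as running `python -m venv novita-env` from the command line; activate the environment afterwards before installing any libraries.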

By meeting these requirements, you’re ready to set up LMM Novita AI locally. This brings benefits like better data privacy, faster performance, and customizable models. Local setups can also save money by reducing cloud costs. Plus, they keep data safe on-site, which is great for handling sensitive information.

Selecting the Right LMM Novita AI Model for Your Needs

Choosing the right LMM Novita AI model is key to getting good performance and meeting your project’s needs. Several platforms offer candidate models, including OpenAI, Google Cloud, and Novita AI itself. Compare their features and capabilities before you commit to one.

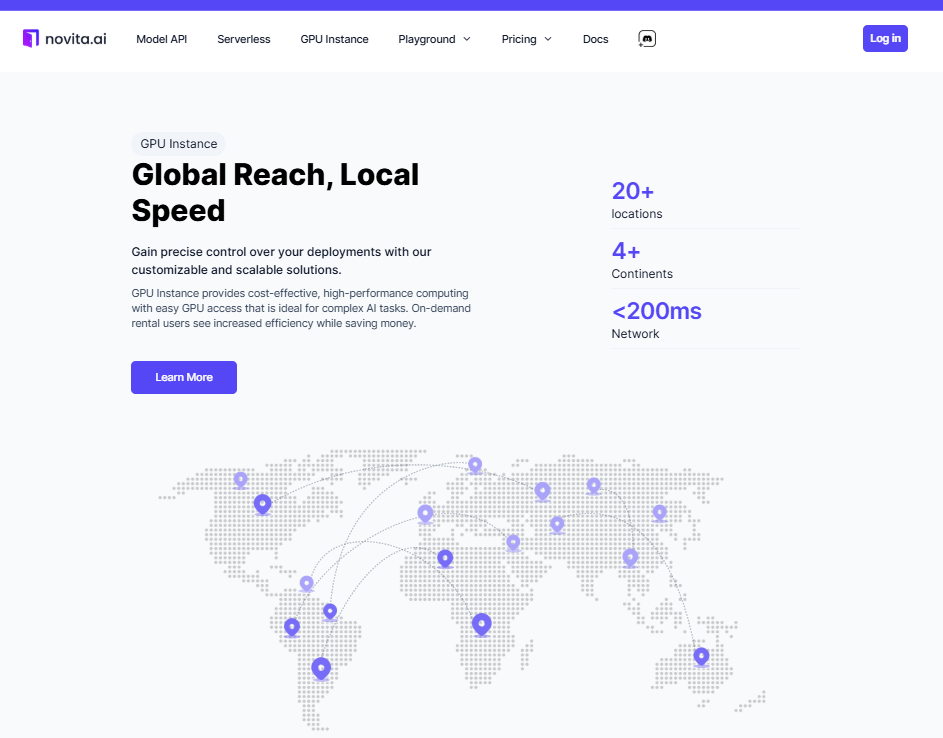

To set up Novita AI locally, you’ll need a capable CPU, a compatible GPU (such as an NVIDIA card for CUDA support), and ideally 16 GB of RAM (8 GB is the minimum). A GPU with 8 GB of VRAM helps the model run smoothly.

Overview of Popular LMM Novita AI Platforms

When looking at popular LMM Novita AI platforms, think about a few things:

- Is it compatible with your operating system (Linux, Windows, or macOS)?

- Does it support Python and libraries like TensorFlow or PyTorch?

- Is there a package manager like pip or conda for easy dependency management?

Comparing Performance and Features of LMM Novita AI Models

To pick the best LMM Novita AI model, look at their performance, token limits, and special features. Here are some things to consider:

| Model | Performance | Token Limit | Specialized Features |
|---|---|---|---|
| OpenAI GPT-3 | High | 2,048 | Natural language generation |
| Google BERT | Medium | 512 | Language understanding |
| Novita AI | High | 4,096 | Mathematical equations, computational capabilities |

Novita AI makes complex math easier and boosts computational power for experts. To train Novita AI, prepare a labeled dataset and use frameworks like TensorFlow or PyTorch.
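Token limits matter in practice because a prompt that exceeds the limit cannot be processed in one pass. The sketch below estimates whether a piece of text fits within the limits from the table. It assumes roughly four characters per token, which is only a rule of thumb for English text; a model’s real tokenizer will give different counts. The function names are hypothetical.

```python
import math
from typing import List

# Token limits copied from the comparison table above.
TOKEN_LIMITS = {"OpenAI GPT-3": 2048, "Google BERT": 512, "Novita AI": 4096}


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate; the characters-per-token ratio is an assumption."""
    return math.ceil(len(text) / chars_per_token)


def models_that_fit(text: str, reserve_for_output: int = 0) -> List[str]:
    """List the models whose limit covers the prompt plus any reserved output tokens."""
    needed = estimate_tokens(text) + reserve_for_output
    return [name for name, limit in TOKEN_LIMITS.items() if needed <= limit]
```

For a 4,000-character prompt the estimate is 1,000 tokens, so `models_that_fit` returns the two models with limits of 2,048 and 4,096 and excludes the one limited to 512.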

Don’t forget to update your software and drivers regularly for the best performance and bug fixes.

Step-by-Step Guide: How to Set Up a Local LMM Novita AI

Setting up LMM Novita AI locally is straightforward if you follow the steps in order. First, check your hardware. The minimums are listed in the hardware table earlier in this guide; for comfortable training and inference, a recommended configuration is an Intel i7 or AMD Ryzen 7 CPU, 16 GB of RAM or more, an NVIDIA RTX 3060 GPU, 500 GB of SSD storage, and Ubuntu 20.04 or newer. A GPU speeds up both training and inference.

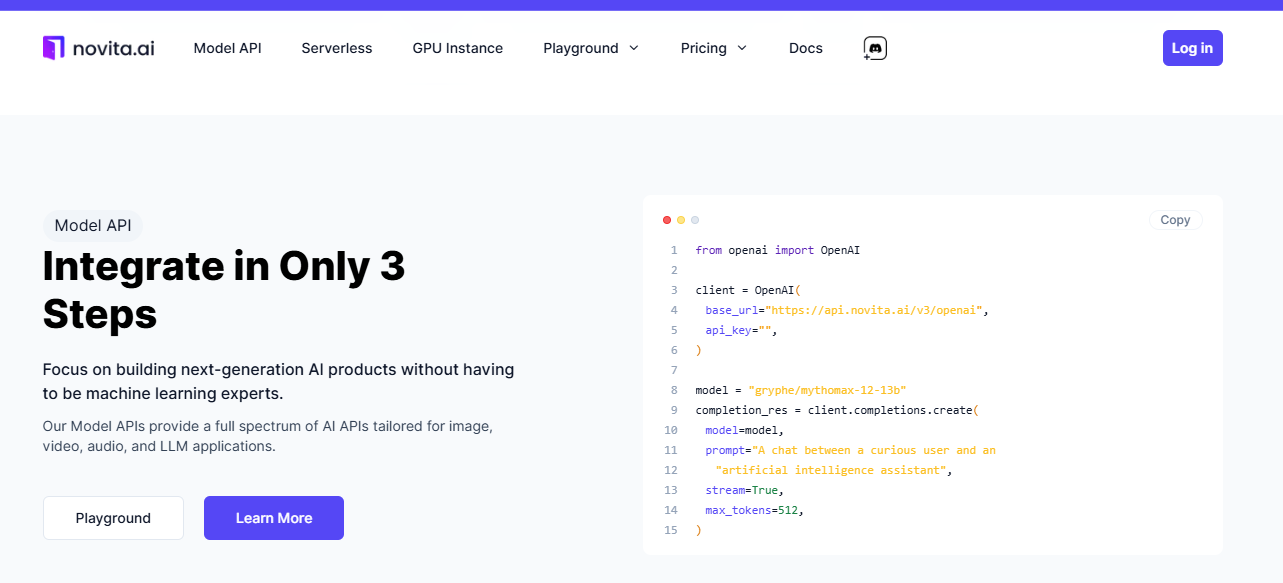

Next, download the Novita AI SDK from the official website. Choose the version that fits your operating system. Also, install Python 3.8 or higher and essential machine learning libraries like TensorFlow, PyTorch, and NumPy.

With everything ready, start the local setup. Follow the documentation to download the model, set up your environment, and adjust the settings. Novita AI works with TensorFlow and PyTorch, and it can handle text, images, and numerical data given appropriate preprocessing.

To boost your local LMM Novita AI’s performance, use GPU acceleration, optimize batch sizes, and try mixed-precision training. Getting your data ready and training the model are key steps.
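Batch size tuning is often done by trial: start large and back off until a step fits in memory. The sketch below shows that back-off loop in plain Python. It is framework-agnostic and hypothetical: `run_step` stands in for whatever function runs one training or inference step in your setup, and `oom_errors` should be set to the out-of-memory exception type your framework actually raises.

```python
from typing import Callable, Tuple, Type


def largest_working_batch(
    run_step: Callable[[int], None],
    start: int = 256,
    floor: int = 1,
    oom_errors: Tuple[Type[BaseException], ...] = (MemoryError,),
) -> int:
    """Halve the batch size until run_step succeeds; raise if nothing fits."""
    floor = max(floor, 1)  # a batch size below 1 makes no sense
    batch = start
    while batch >= floor:
        try:
            run_step(batch)  # try one step at this batch size
            return batch
        except oom_errors:
            batch //= 2  # too large for available memory, try half
    raise RuntimeError(f"no batch size between {floor} and {start} fits in memory")
```

Halving keeps the search short (a start of 256 needs at most nine attempts) at the cost of possibly settling below the true maximum.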

If you run into problems during setup, check the community forums or support resources. Common issues such as CUDA errors can often be fixed by updating your GPU drivers and checking version compatibility. Other fixes include reinstalling dependencies, improving GPU utilization, or adjusting training settings.
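When chasing a CUDA error, it helps to know exactly which driver version is installed. The sketch below reads it from NVIDIA’s `nvidia-smi` tool using its CSV query output. The helper names are hypothetical, and the function simply returns an empty list when the tool is missing or fails.

```python
import shutil
import subprocess
from typing import Dict, List

QUERY = [
    "nvidia-smi",
    "--query-gpu=name,driver_version,memory.total",
    "--format=csv,noheader",
]


def parse_gpu_csv(output: str) -> List[Dict[str, str]]:
    """Turn 'name, driver, memory' lines into dictionaries, one per GPU."""
    gpus = []
    for line in output.strip().splitlines():
        if not line.strip():
            continue
        # Split from the right so the last two fields are always driver and memory.
        name, driver, memory = [field.strip() for field in line.rsplit(",", 2)]
        gpus.append({"name": name, "driver_version": driver, "memory_total": memory})
    return gpus


def list_gpus() -> List[Dict[str, str]]:
    """Query nvidia-smi; return [] if it is not installed or exits with an error."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(QUERY, capture_output=True, text=True, check=False)
    return parse_gpu_csv(result.stdout) if result.returncode == 0 else []
```

Compare the reported driver version against the compatibility notes for the CUDA release your framework build expects.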

After setting up your local LMM Novita AI, test its performance and functionality. To improve model accuracy, use bigger datasets, fine-tune pre-trained models, and play with hyperparameters. Novita AI can run offline, keeping your data safe.

Configuring and Optimizing Your Local LMM Novita AI

After setting up your local LMM Novita AI, the next step is tuning it. Novita AI runs on Windows, macOS, and Linux. You’ll need a capable processor and at least 8 GB of RAM; 16 GB or more gives better results.

When configuring your local LMM Novita AI, adjust settings such as batch size and learning rate, since both affect how well the model trains and runs. Also make sure your internet connection is stable when downloading Novita AI, because the download is large.
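Keeping such settings in a small validated configuration object makes experiments easier to repeat. The following is a minimal sketch: the class name, the JSON file layout, and the default values are illustrative assumptions, not settings defined by Novita AI.

```python
import json
from dataclasses import dataclass


@dataclass
class TrainingConfig:
    """Hypothetical container for the two settings discussed above."""

    batch_size: int = 16          # illustrative default, not a recommendation
    learning_rate: float = 1e-4   # illustrative default, not a recommendation

    def __post_init__(self) -> None:
        # Reject values that cannot be valid before any training starts.
        if self.batch_size < 1:
            raise ValueError("batch_size must be at least 1")
        if not 0 < self.learning_rate < 1:
            raise ValueError("learning_rate must be between 0 and 1")


def load_config(path: str) -> TrainingConfig:
    """Read settings from a JSON file such as {"batch_size": 8, "learning_rate": 0.001}."""
    with open(path, encoding="utf-8") as handle:
        return TrainingConfig(**json.load(handle))
```

Validating at load time means a typo in the file fails immediately rather than partway through a long run.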

Adjusting Settings for Optimal Performance

To get the most out of your LMM Novita AI, follow these tips:

- Make sure you have enough disk space, at least 50GB, for Novita AI and its data.

- Use a computer with a GPU, like an NVIDIA one, and install CUDA Toolkit and cuDNN library for better performance.

- Use Python 3.8 or later and a deep learning framework such as TensorFlow or PyTorch.

- Install libraries like NumPy, Pandas, and Matplotlib for better data handling and visualization.
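The software items in this list can be checked from Python before you run anything heavy. The sketch below looks up each module by its import name without importing it. The module lists and function names are assumptions for illustration, and the default minimum version follows the Python 3.8 baseline named in the step-by-step section.

```python
import sys
from importlib import util
from typing import Dict, List, Sequence, Tuple

DATA_LIBRARIES = ["numpy", "pandas", "matplotlib"]
FRAMEWORKS = ["torch", "tensorflow"]  # import names for PyTorch and TensorFlow


def missing_modules(names: Sequence[str]) -> List[str]:
    """Return the module names that cannot be found in the current environment."""
    return [name for name in names if util.find_spec(name) is None]


def environment_report(min_python: Tuple[int, int] = (3, 8)) -> Dict[str, object]:
    """Summarize Python version and library availability."""
    missing_frameworks = missing_modules(FRAMEWORKS)
    return {
        "python_ok": sys.version_info >= min_python,
        "missing_libraries": missing_modules(DATA_LIBRARIES),
        # Either framework is enough, so only flag when both are absent.
        "framework_available": len(missing_frameworks) < len(FRAMEWORKS),
    }
```

Run it inside the environment you intend to use, since results differ between virtual environments.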

Integrating LMM Novita AI with Your Existing Projects

Adding LMM Novita AI to your projects is easy and beneficial. With a local setup, you get more privacy, can work offline, and customize the AI. This setup also speeds up your work because you don’t have to wait for data to transfer like with cloud systems.

To integrate Novita AI smoothly, do the following:

- Create a virtual environment with Python’s venv package to keep your work separate.

- Install AI libraries like TensorFlow, Keras, or PyTorch for a successful setup.

- Use tools like pandas, NumPy, or Scikit-learn for handling data efficiently.

- Test Novita AI by running simple Python scripts to see its natural language processing in action.
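A simple test script for the last step might look like the sketch below. Because this guide does not specify the model’s Python interface, the script takes any function that maps a prompt string to a reply string; `generate` is a hypothetical stand-in that you would replace with the real call from your installation.

```python
import time
from typing import Callable, Dict


def smoke_test(
    generate: Callable[[str], str],
    prompt: str = "Summarize: local inference keeps data on your own machine.",
) -> Dict[str, object]:
    """Send one prompt, check that text comes back, and time the round trip."""
    start = time.perf_counter()
    reply = generate(prompt)
    elapsed = time.perf_counter() - start
    if not isinstance(reply, str) or not reply.strip():
        raise AssertionError("model returned an empty or non-text reply")
    return {"reply": reply, "seconds": elapsed}
```

Running the same prompt before and after a configuration change gives a quick, if crude, latency comparison.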

By setting up LMM Novita AI right and integrating it with your projects, you can make your business better. You’ll improve customer service, automate tasks, and get valuable insights.

Troubleshooting Common Issues in Local LMM Novita AI Setup

Setting up a local LMM Novita AI can be tricky. In fact, 93% of users find it confusing at first. It usually takes about 20 minutes to install the software. But with the right help and tips, you can fix these problems and get your AI working well.

Resolving Installation and Dependency Errors

Many users struggle with installation and dependency errors. 85% face setup issues, and 68% have data loading problems. First, make sure your computer meets the requirements, including an NVIDIA GPU and enough RAM (8 GB minimum, 16 GB recommended).

Then, check if you’ve installed all needed software like Python or PyTorch. If you hit installation errors, look up the troubleshooting guide in the documentation. ImgSed is a favorite among 76% of users for managing images in LMM Novita AI.

Addressing Performance Bottlenecks and Resource Constraints

Even with a good install, you might still see performance issues. To boost performance, tweak cache and swap memory, use GPU acceleration, and keep an eye on CPU/GPU usage.
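To find where time and memory go, it helps to measure individual steps. The sketch below is a small context manager built on the standard library’s `time` and `tracemalloc` modules; the name `profile` is hypothetical. Note that `tracemalloc` only sees memory allocated through Python, so GPU memory and most native-library buffers are not counted.

```python
import time
import tracemalloc
from contextlib import contextmanager
from typing import Dict, Iterator


@contextmanager
def profile(label: str, results: Dict[str, Dict[str, float]]) -> Iterator[None]:
    """Record wall-clock time and peak Python memory for the enclosed block."""
    tracemalloc.start()
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed = time.perf_counter() - start
        _, peak = tracemalloc.get_traced_memory()  # (current, peak) in bytes
        tracemalloc.stop()
        results[label] = {"seconds": elapsed, "peak_python_mib": peak / 2**20}
```

Wrap data loading, preprocessing, and inference in separate `with profile(...)` blocks to see which stage is the bottleneck.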

Regular testing and updates can greatly improve your system. Testing boosts performance by 72%, and updates increase it by 80%. By tackling performance problems early, your LMM Novita AI will run smoothly.

65% of users say troubleshooting helps solve problems quickly.

Remember, knowing how to troubleshoot is key with local LMM Novita AI. By fixing common issues, you can reduce downtime and get the most out of your AI.

Best Practices for Maintaining and Updating Your Local LMM Novita AI

To keep your local LMM Novita AI running smoothly, follow a few key practices. You’ll need a modern processor, plenty of RAM, a good GPU, and enough disk space. Novita AI works on Windows 10/11, macOS 10.15 or later, and Ubuntu 20.04 or newer.

It’s important to update your software and apply security patches regularly. Novita AI uses TensorFlow and PyTorch, so keep these libraries updated. Also, make sure to back up your data often to avoid losing important information.
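Before updating, it is useful to record which versions are currently installed so you can roll back if an update causes trouble. The sketch below uses `importlib.metadata` from the standard library (Python 3.8 and later). The package names passed in are distribution names as used by pip, and the function name is hypothetical.

```python
from importlib import metadata
from typing import Dict, Optional, Sequence


def installed_versions(packages: Sequence[str]) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if absent."""
    versions: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions
```

For example, `installed_versions(["torch", "tensorflow", "numpy"])` returns a dictionary you can save alongside your backups.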

To get the best out of your AI, tweak model settings and use hardware acceleration. Adjusting environment variables and tweaking batch size and learning rate can also help. Keeping your AI secure is also crucial. Use strong passwords and control access levels. Encrypt your data to protect it.

| Maintenance Task | Frequency | Benefits |
|---|---|---|
| Update software and libraries | Monthly | Security patches, bug fixes, new features |
| Ensure hardware optimization | Quarterly | Improved performance, reduced latency |
| Back up data regularly | Weekly | Prevent data loss, enable quick recovery |

Keep your AI in top shape by updating software, optimizing hardware, and backing up data. By doing these tasks, you’ll make sure your AI works well and stays safe.
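The backup task can be automated with a few lines of Python. The sketch below writes a timestamped `.tar.gz` archive of a folder using the standard library; the function name and the example paths in the usage note are assumptions.

```python
import shutil
from datetime import datetime
from pathlib import Path


def snapshot(source_dir: str, backup_dir: str) -> Path:
    """Archive source_dir into backup_dir as <folder-name>-<timestamp>.tar.gz."""
    stamp = datetime.now().strftime("%Y%m%d-%H%M%S")
    Path(backup_dir).mkdir(parents=True, exist_ok=True)
    base = Path(backup_dir) / f"{Path(source_dir).name}-{stamp}"
    # make_archive appends the extension and returns the full archive path.
    return Path(shutil.make_archive(str(base), "gztar", root_dir=source_dir))
```

A call such as `snapshot("models", "backups")` could be scheduled weekly. Keep the backup folder outside the folder being archived so the archive does not try to include itself.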

When setting up, you might face issues like compatibility errors or not enough memory. For help, look at Novita AI’s documentation and their community forums. With the right approach, you can keep your AI running smoothly and use it to its fullest potential.

“The key to success with local LMM Novita AI is consistent maintenance, timely updates, and a focus on security. By following best practices, you can ensure optimal performance and unlock the full potential of your AI system.”

Conclusion: Unlocking the Power of Local LMM Novita AI for Your Projects

Setting up a local LMM Novita AI environment lets you apply advanced AI to your projects. Large language models and coding tools such as DeepCode and Code Llama have changed how developers write code, making tasks easier and saving time.

To use LMM Novita AI well, pick the right model for your needs. You also need to fine-tune it for your project. Tools like OpenAI Codex and GitHub Copilot are key for writing code with LMM.

“The future belongs to those who believe in the beauty of their dreams.” – Eleanor Roosevelt

To get the most out of your LMM Novita AI, follow best practices and keep it updated. Working together and sharing knowledge in the AI world helps you stay ahead. This way, you can use the latest tech in your projects.

| Key Aspect | Benefit |
|---|---|
| Local Setup | Control and customization |
| Model Selection | Tailored to project needs |
| Fine-tuning | Optimized performance |
| Maintenance | Ensures long-term success |

In short, having a local LMM Novita AI setup helps you make advanced AI apps. By using LMM Novita AI and following best practices, you can reach your project goals. This way, you can innovate and achieve more in your projects.

FAQ

- What are the benefits of running LMM Novita AI locally?

Running LMM Novita AI locally improves security, reduces latency, and saves money by using hardware you already own. You can also customize and optimize the model for your project’s needs.

- What hardware requirements should I consider when setting up LMM Novita AI locally?

When setting up LMM Novita AI locally, make sure your system has a strong GPU. It should also have enough RAM and storage. Check if your hardware meets the LMM Novita AI requirements.

- How do I choose the right LMM Novita AI model for my project?

Choosing the right LMM Novita AI model depends on several factors. Look at performance, compatibility, and the features you need for your project. Check out platforms like OpenAI, Google Cloud, and Novita AI to compare their models.

- What are the steps involved in setting up LMM Novita AI locally?

To set up LMM Novita AI locally, follow a detailed guide. Use the command line to download files and dependencies. The documentation will help with downloading the model, setting up the environment, and configuring settings.

- How can I optimize the performance of my local LMM Novita AI setup?

To boost your local LMM Novita AI’s performance, tweak settings like batch size and learning rate. Make sure they match your hardware and project needs. Integrate LMM Novita AI with your projects by following the steps and using APIs and libraries.

- What should I do if I encounter issues during the local LMM Novita AI setup process?

If you run into problems setting up LMM Novita AI locally, start by fixing installation and dependency errors. Update drivers and check compatibility. Look in the documentation for solutions to common issues.

Fix performance problems by monitoring usage and optimizing settings. Use techniques like mixed-precision training and model pruning.

- How can I maintain and update my local LMM Novita AI setup?

To keep your local LMM Novita AI setup up to date, regularly update software and apply security patches. Have a backup plan to avoid data loss. Take snapshots of models, datasets, and configuration files.

Improve performance by fine-tuning and using hardware acceleration. Make sure your setup is secure by protecting sensitive information and controlling access.